Defending against autonomous AI agent exploits

The Sysdig research team highlighted an autonomous, AI-orchestrated exploit in which the threat actor went from initial access to administrative privileges in less than 10 minutes. The exploit marks a departure from human-operated exploits, with unprecedented automation and speed.

This writeup aims to help defenders formulate a defensive strategy against such exploits.

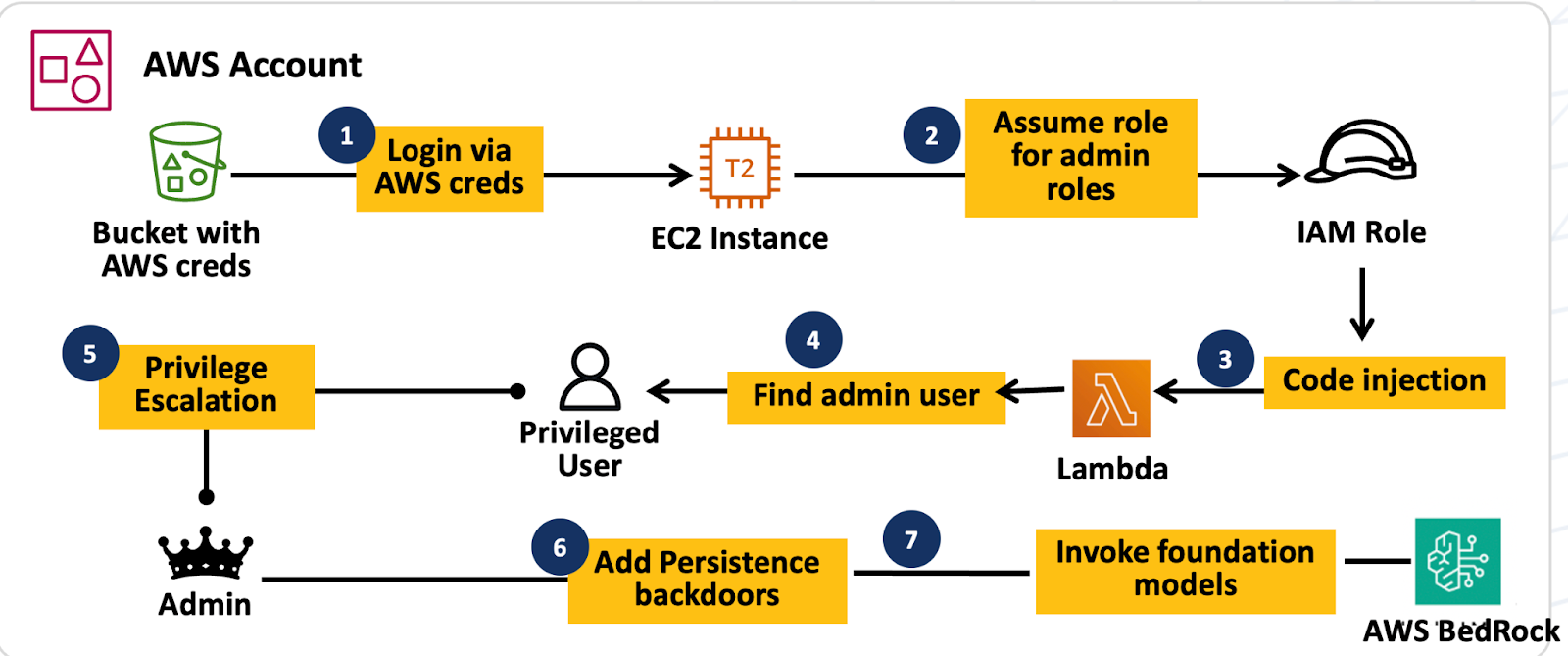

Anatomy of the attack - LLMjacking

This exploit targeted an organization running AI/LLM workloads on AWS. The target was Amazon Bedrock, a popular managed service that provides access to foundation models.

The attacker's goal was to gain unauthorized access to Amazon Bedrock and to launch GPU instances to train models. This type of attack is known as LLMjacking, in which attackers hijack AI infrastructure to gain access to foundation models.

This is a multi-stage exploit. The threat actor gained initial access to the AWS environment using valid credentials found in a public S3 bucket. The attacker then performed reconnaissance to find IAM roles with administrative permissions, assumed the roles it could, injected code into an AWS Lambda function to gain administrative user permissions, established persistence, and invoked LLMs in Amazon Bedrock.

LLM-assisted multi-stage exploit

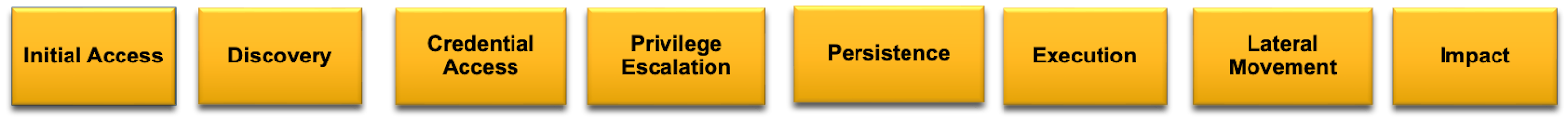

Analysis based on MITRE ATT&CK framework

The exploit sequence can be mapped to, and organized along, the tactics of the MITRE ATT&CK framework.
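As a rough illustration, the sketch below tags a condensed subset of the appendix timeline with standard MITRE ATT&CK enterprise tactic IDs and groups the events by tactic. The event-to-tactic assignments are an illustrative interpretation for this article, not an authoritative mapping published by Sysdig or MITRE.

```python
# Standard MITRE ATT&CK enterprise tactic IDs used in this sketch.
TACTICS = {
    "TA0001": "Initial Access",
    "TA0007": "Discovery",
    "TA0004": "Privilege Escalation",
    "TA0003": "Persistence",
    "TA0040": "Impact",
}

# Condensed subset of the appendix timeline: (elapsed time, summary, tactic ID).
# The tactic assigned to each event is an illustrative interpretation.
TIMELINE = [
    ("0:00:00", "Credentials for compromised_user taken from a public S3 bucket", "TA0001"),
    ("0:06:00", "IAM enumeration; attempts to assume admin roles", "TA0007"),
    ("0:06:00", "Assumed existing roles sysadmin, netadmin, account", "TA0004"),
    ("0:08:00", "Lambda EC2-init code replaced; admin user frick compromised", "TA0004"),
    ("0:11:00", "backdoor-admin created with AdministratorAccess", "TA0003"),
    ("0:58:00", "Bedrock model invocations begin", "TA0040"),
    ("1:00:00", "Access keys created for rocker and AzureADRoleManager", "TA0003"),
    ("1:42:00", "p4d.24xlarge GPU instance launched", "TA0040"),
]

def group_by_tactic(timeline):
    """Return {tactic name: [timestamps]} in the order tactics first appear."""
    grouped = {}
    for stamp, _summary, tactic_id in timeline:
        grouped.setdefault(TACTICS[tactic_id], []).append(stamp)
    return grouped

for tactic, stamps in group_by_tactic(TIMELINE).items():
    print(f"{tactic:<22}{', '.join(stamps)}")
```

Grouping this way makes it easy to see that Discovery, Privilege Escalation, and Persistence all occur within the first 11 minutes, well before the Impact events.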

What makes this attack different?

Cloud exploits, including stealthy attacks known as Living off the Cloud attacks, have risen in frequency. In earlier iterations of these attacks, a human adversary typically performed each step. To stay stealthy, these multi-stage exploits unfolded slowly, often taking hours or days, and sometimes even weeks, to complete.

This AI-automated exploit completed its multi-stage sequence in less than 10 minutes, an order of magnitude faster than human-operated exploits, even those performed by highly skilled adversaries.

The level of automation and speed in AI-orchestrated attacks represents a marked departure from typical exploits.

Defender’s perspective: assume compromise

From a defender’s viewpoint, it is important to strengthen prevention controls where applicable and feasible. However, this exploit shows the limits of prevention: the threat actor gained initial access through AWS credentials found in a public S3 bucket. An organization with a significant cloud estate has many such pathways to initial access. It is imperative for defenders to adopt an assume-compromise security posture, one that operates under the principle that the adversary will gain access to the environment.

What makes threat detection challenging for AI-orchestrated attacks

Threat detection has traditionally focused on establishing a baseline of “normal” usage patterns and flagging deviations from that baseline as suspect; this is the anomaly-based detection approach. The approach is essentially reactive: it observes a series of events and reacts to them to identify suspicious activity.

Cloud workloads tend to be dynamic and ephemeral, which makes a baseline hard to establish. When a threat actor completes their mission in under 10 minutes, as in this AI-orchestrated exploit, establishing a baseline and identifying deviations within such a compressed time window is highly challenging.
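To make the timing problem concrete, here is a minimal sketch of baseline detection: a z-score detector that cannot flag anything until it has seen a warm-up period of samples. The warm-up length, threshold, and per-minute API-call counts are hypothetical values chosen for illustration, not parameters of any particular product.

```python
import statistics

class BaselineDetector:
    """Z-score detector that cannot flag anything until it has a baseline."""

    def __init__(self, warmup=60, threshold=3.0):
        self.warmup = warmup        # samples required before a baseline exists
        self.threshold = threshold  # z-score above which a sample is anomalous
        self.history = []

    def observe(self, value):
        """Record one sample; return True if it deviates from the baseline."""
        flagged = False
        if len(self.history) >= self.warmup:
            mean = statistics.mean(self.history)
            stdev = statistics.pstdev(self.history) or 1e-9  # avoid dividing by zero
            flagged = abs(value - mean) / stdev > self.threshold
        self.history.append(value)
        return flagged

# A newly launched workload has no history: ten minutes of attacker activity
# (hypothetically 40 API calls per minute) arrive before the 60-sample
# warm-up completes, so nothing is flagged.
detector = BaselineDetector(warmup=60)
print(any([detector.observe(v) for v in [40.0] * 10]))  # False
```

With per-minute samples, a 60-sample warm-up means an hour of observation before the detector can say anything, far longer than the attack's path to administrative privileges.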

Defending against such rapidly executing threats requires a proactive, preemptive security posture.

What is preemptive defense and what role does it play in defending against AI-orchestrated attacks?

Preemptive defense is a proactive approach to cyber defense, one that is based on the principle of anticipating threats and setting a defensive strategy before the threat occurs.

A foundational element of a preemptive defense strategy is cyber deception. Deception means anticipating a threat and setting traps at locations of strategic interest to the attacker. Any interaction with a trap is an immediate indicator of malicious activity, providing instant detection without requiring a baseline.
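A deception tripwire can be sketched as a filter over AWS CloudTrail records: any record that uses or targets a honeytoken is an alert, with no history required. The record fields used here (`userIdentity`, `requestParameters`, `roleArn`, `secretId`) follow the CloudTrail record format, but the honeytoken ARNs, key ID, and secret name are hypothetical placeholders (111122223333 is the AWS documentation example account ID).

```python
from typing import Iterable, Iterator

# Hypothetical honeytoken inventory; the names are placeholders.
HONEYTOKEN_ARNS = {"arn:aws:iam::111122223333:role/Administrator"}
HONEYTOKEN_KEY_IDS = {"AKIAEXAMPLEDECOY0001"}
HONEYTOKEN_SECRETS = {"prod/admin-access-key"}

def touches_honeytoken(record: dict) -> bool:
    """True if a CloudTrail record uses or targets any honeytoken."""
    identity = record.get("userIdentity") or {}
    params = record.get("requestParameters") or {}  # may be null in CloudTrail
    return (
        identity.get("accessKeyId") in HONEYTOKEN_KEY_IDS   # decoy key was used
        or identity.get("arn") in HONEYTOKEN_ARNS           # decoy identity acted
        or params.get("roleArn") in HONEYTOKEN_ARNS         # AssumeRole on a decoy
        or params.get("secretId") in HONEYTOKEN_SECRETS     # decoy secret was read
    )

def alerts(records: Iterable[dict]) -> Iterator[dict]:
    """Yield every record that touches a honeytoken; no baseline is needed."""
    return (r for r in records if touches_honeytoken(r))

events = [
    {"eventName": "ListRoles",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/compromised_user"},
     "requestParameters": None},
    {"eventName": "AssumeRole",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/compromised_user"},
     "requestParameters": {"roleArn": "arn:aws:iam::111122223333:role/Administrator",
                           "roleSessionName": "session"},
     "errorCode": "AccessDenied"},
]
print([e["eventName"] for e in alerts(events)])  # ['AssumeRole']
```

Because CloudTrail also records denied calls (with an `errorCode` field), the tripwire fires on the attempt itself, whether or not the attacker succeeds.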

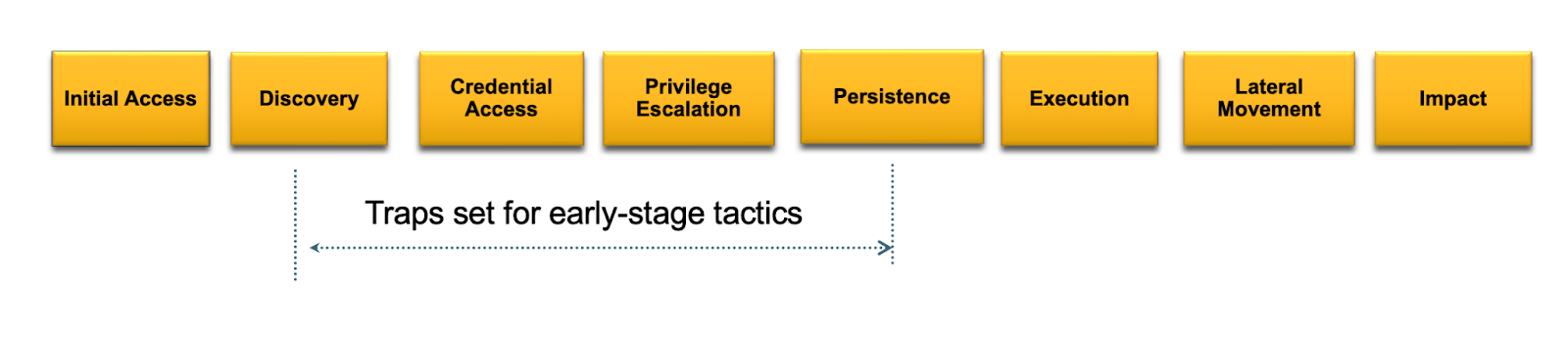

Deception design: setting traps for early-stage attack activity

An effective deception strategy is anchored in placing traps tailored to detect threats at early stages of the attack lifecycle. For the AI-orchestrated LLMjacking exploit, deception-based traps are set for the MITRE tactics of Discovery, Credential Access, Privilege Escalation, and Persistence. The objective is to find and stop the attack before it reaches the Execution or Impact stage, where the actual damage or breach occurs.
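One way to check a deception design against these four tactics is to tag each planned trap with the tactics it is expected to detect and compute which tactics remain uncovered. The trap names and their tactic tags below are a hypothetical plan, not a recommended inventory.

```python
from __future__ import annotations

# Early-stage tactics targeted with traps in this strategy.
EARLY_STAGE_TACTICS = {"Discovery", "Credential Access", "Privilege Escalation", "Persistence"}

# Hypothetical deception plan: trap -> tactics it is expected to detect.
DECEPTION_PLAN = {
    "decoy IAM role 'Administrator'": {"Discovery", "Privilege Escalation"},
    "decoy access key in Secrets Manager": {"Credential Access"},
    "decoy Lambda function": {"Discovery", "Privilege Escalation"},
}

def uncovered_tactics(plan: dict[str, set[str]], required: set[str]) -> set[str]:
    """Return the required tactics that no trap in the plan covers."""
    covered = set().union(*plan.values())
    return required - covered

print(sorted(uncovered_tactics(DECEPTION_PLAN, EARLY_STAGE_TACTICS)))  # ['Persistence']
```

In this hypothetical plan, Persistence is the gap. A decoy IAM user would be one way to close it, since the attacker in this exploit created access keys for existing users (rocker and AzureADRoleManager at 1:00:00).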

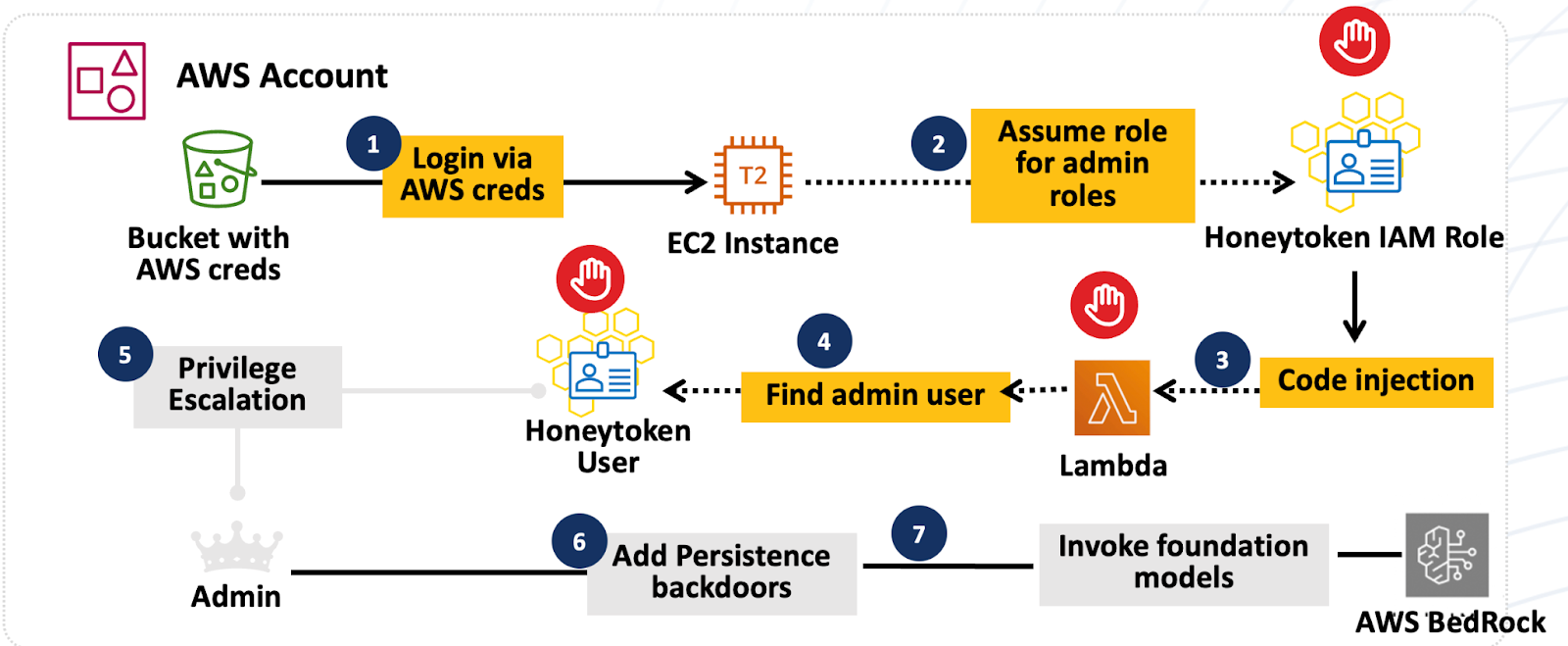

Deception types for AWS workloads

In an AWS environment, deceptions are deployed to represent entities of value to a threat actor or an AI agent. These include account honeytokens that represent deceptive identities (IAM roles and IAM users), workload honeytokens that represent deceptive credential profiles (access keys and secrets in AWS Secrets Manager), and decoys that represent cloud resources (EC2 instances, S3 buckets, Lambda functions).
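As a minimal sketch of an account honeytoken, the function below builds parameters for a decoy IAM role that looks administrative. The dictionary keys match the keyword arguments of boto3's `iam.create_role` (`RoleName`, `AssumeRolePolicyDocument`, `Description`), but the sketch itself does not call AWS, and the role name, description, and account ID are hypothetical. No permissions policy is attached, so even a successful `AssumeRole` yields a session that can do nothing; the value lies in the CloudTrail record the attempt leaves behind.

```python
import json

def decoy_role_params(role_name: str, account_id: str) -> dict:
    """Build CreateRole parameters for a decoy role that looks administrative."""
    # Trust policy: principals in this account may attempt to assume the role.
    # No permissions policy is attached in this sketch.
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
            "Action": "sts:AssumeRole",
        }],
    }
    return {
        "RoleName": role_name,
        "AssumeRolePolicyDocument": json.dumps(trust_policy),
        "Description": "Administrative access role",  # lure text, deliberately bland
    }

params = decoy_role_params("Administrator", "111122223333")
# With AWS credentials configured, the role could then be created with:
#   boto3.client("iam").create_role(**params)
```

Avoiding tags or descriptions that mention deception is deliberate: an agent that calls `GetRole` during discovery would otherwise learn that the role is a trap.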

By deploying a deception strategy tailored to the AWS environment, the defender can find and stop the exploit at step 2, when the AI agent attempts to assume what appears to be an administrative role.
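The effect can be sketched by replaying a condensed version of the appendix timeline against a set of decoy role names and reporting which attack steps had not yet run when the first trap fired. The API action names are shorthand for the timeline entries, and the decoy names are hypothetical: they reuse the names the agent actually tried, which is hindsight, whereas in practice decoy names are chosen in advance to look attractive. The sketch only locates the alert; revoking sessions and keys is a separate response step.

```python
# Condensed appendix timeline: (elapsed minutes, API action, target).
TIMELINE = [
    (0, "GetObject", "public S3 bucket"),
    (6, "AssumeRole", "Administrator"),
    (8, "UpdateFunctionCode", "EC2-init"),
    (11, "CreateUser", "backdoor-admin"),
    (58, "InvokeModel", "Bedrock"),
    (102, "RunInstances", "p4d.24xlarge"),
]

# Hypothetical: decoy roles planted under attractive administrative names.
DECOY_ROLES = {"admin", "Administrator"}

def first_trap_hit(timeline, decoy_roles):
    """Return (index, minute) of the first AssumeRole against a decoy, or None."""
    for i, (minute, action, target) in enumerate(timeline):
        if action == "AssumeRole" and target in decoy_roles:
            return i, minute
    return None

hit = first_trap_hit(TIMELINE, DECOY_ROLES)
if hit is not None:
    idx, minute = hit
    ahead = [action for _, action, _ in TIMELINE[idx + 1:]]
    print(f"Alert at minute {minute}; not yet executed: {ahead}")
    # Alert at minute 6; not yet executed: ['UpdateFunctionCode', 'CreateUser', 'InvokeModel', 'RunInstances']
```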

Deception-based preemptive defense stops this exploit at step 2

Summary

The AI-orchestrated multi-stage exploit reduced the window from initial access to administrative privileges to less than 10 minutes. Preemptive defense anticipates threats and sets traps to detect the exploit at the early stages of its sequence. Honeytokens representing deceptive IAM roles detect this AI agent exploit at step 2, during IAM role enumeration and assumption.

Appendix: attack timeline

0:00:00 – The threat actor extracted credentials for IAM user compromised_user from public S3 buckets.

0:06:00 – Following initial enumeration, they failed to assume admin roles (admin, Administrator) but successfully assumed existing roles sysadmin, netadmin, and account.

———Deception detects the attack at this stage———

0:08:00 – They replaced the code of the Lambda function EC2-init and increased the timeout from 3 to 30 seconds. The function was modified three times, first targeting adminGH (which lacked admin privileges), then successfully compromising the admin user frick.

0:11:00 – Using the admin user frick, they created a new user backdoor-admin and attached the AdministratorAccess policy.

0:58:00-1:51:00 – They invoked Bedrock models 13 times, including Claude Sonnet 4, Claude Opus 4, Claude 3.5 Sonnet, Claude 3 Haiku, DeepSeek R1, Llama 4 Scout, Amazon Nova Premier, Amazon Titan Image Generator, and Cohere Embed v3. Invoking certain Claude models required calling AcceptAgreementRequest. They leveraged cross-Region inference throughout.

1:00:00 – They created access keys for users rocker (which had BedrockFullAccess policy) and AzureADRoleManager.

1:05:00-1:35:00 – Extensive reconnaissance across Secrets Manager, SSM, S3, ECS, Organizations, RDS, CloudWatch, Bedrock, OpenSearch Serverless, SageMaker, and KMS.

1:21:00 – Successfully assumed roles of sysadmin, developer, and external. Failed to assume EKS-access.

1:42:00 – Using backdoor-admin, they failed to run p5.48xlarge instances due to capacity constraints, then successfully launched a p4d.24xlarge instance. The instance was terminated after 5 minutes. They also shared its snapshot (SharedSnapshotVolumeCreated).

1:51:00 – The threat actor’s access was terminated and the attack ended.
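The timeline's elapsed times can be turned into intervals with a few lines of code. The timestamps are taken from the appendix (which rounds them to the minute), and the "deception alert" milestone corresponds to the marker placed after the 0:06:00 entry. In this timeline, roughly two minutes separate the alert from the compromise of the admin user frick, which suggests that the response to a deception alert also needs to be fast, and likely automated.

```python
def to_seconds(stamp: str) -> int:
    """Convert an elapsed-time stamp such as '1:42:00' to seconds."""
    hours, minutes, seconds = (int(part) for part in stamp.split(":"))
    return hours * 3600 + minutes * 60 + seconds

MILESTONES = [
    ("initial access", "0:00:00"),
    ("deception alert (step 2)", "0:06:00"),
    ("admin user frick compromised", "0:08:00"),
    ("backdoor-admin created", "0:11:00"),
    ("access terminated", "1:51:00"),
]

for name, stamp in MILESTONES:
    print(f"{name:<30} +{to_seconds(stamp) // 60} min")

window = to_seconds("0:08:00") - to_seconds("0:06:00")
print(f"Alert-to-admin window: {window // 60} min")  # Alert-to-admin window: 2 min
```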