Countering AI-orchestrated attacks with preemptive defense

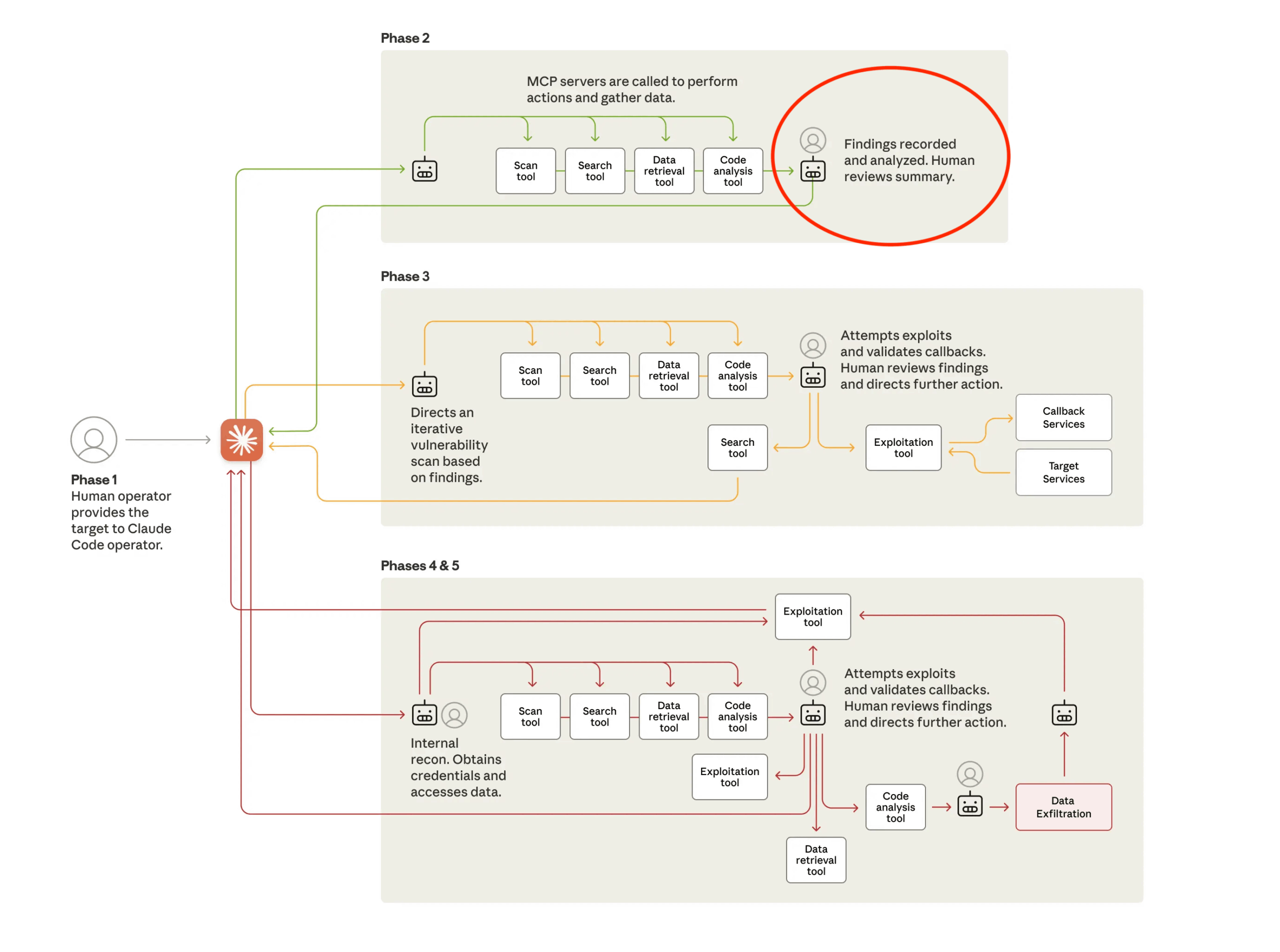

The Anthropic report, published in November 2025, surfaces findings that are startling, even though many in the security community have anticipated this trajectory given the rapid progress of AI. The report describes a highly orchestrated, multi-stage exploit sequence that is almost entirely automated, with roughly 80 to 90 percent of the actions performed by an AI agent. The attacker’s role is limited to initiating the sequence with a series of prompts and providing occasional selection or approval at a few predetermined checkpoints.

Figure 1: Attack lifecycle for AI-orchestrated attack. Deception-based detection at phase 2.

( source: https://www.anthropic.com/news/disrupting-AI-espionage )

The exploit is powered by Claude Code, which orchestrates the agentic workflow. Through carefully constructed prompts, the attacker guides Claude into performing reconnaissance, vulnerability discovery, exploitation, lateral movement, credential harvesting, data analysis, and exfiltration. Each step in the exploit chain is executed at machine speed and with minimal human input.

This writeup provides an analysis of the exploit sequence and the defensive countermeasures required to counter AI-driven attack automation.

How do agentic, autonomous exploits differ from human-driven threats?

- Primarily autonomous decision making across the full multi-stage attack.

- Execution velocity that far exceeds human capability.

- The ability to assemble commodity tools into large combinations of exploit chains.

Autonomous decision making, with roughly 80 to 90 percent of the exploit performed by the agent, represents a fundamental shift in attacks. The human role has been reduced to initiating the exploit and providing selection or confirmation at a few well-defined checkpoints where the agent requests approval. The result is an attack workflow that is highly automated and gated only at the moments where human input benefits the operation.

Agents capable of piecing together commodity tools and offensive techniques into a complex multi-stage exploit introduce additional complexity for defenders. The agent can assemble atomic exploit steps into many combinations, producing many possible offensive sequences. This combinatorial flexibility makes prediction, preemptive rule engineering, and pattern-based detection significantly harder.

Defender’s perspective: what are the implications for cyber defense

A defensive strategy must be grounded in first principles that align with the nature of agentic exploits. Traditional defense in depth, which combines prevention controls with reactive detection, strains under the speed and volume of an AI-driven attack sequence.

Strengthening prevention controls remains essential. Reducing the exploitable attack surface by segmenting networks, enforcing MFA, removing stale privileges, and deleting cached credentials limits the pathways available to an AI agent.

Why threat detection needs to evolve from reactive approaches

Threat detection techniques have traditionally relied on reactive approaches built around observing and reacting to suspicious activity. These methods assume that malicious behavior is both infrequent and performed at a human pace. Anomaly detection, for example, depends on establishing a baseline of normal behavior and flagging deviations that exceed a certain threshold.

This model rests on several assumptions:

- Malicious activity occurs sporadically within a predictable request volume.

- Actions that diverge from normal patterns will surface early enough in the attack for defenders to respond.

- The adversary’s progression from reconnaissance to exploitation is sequential and performed manually.

Agentic AI attacks break these assumptions. An autonomous agent can execute reconnaissance, scanning, and exploitation steps concurrently at a rate far beyond human capability. While these concurrent actions may eventually trigger anomaly-based alerts, the alerts often appear late in the exploit cycle. By the time a deviation is detected, the agent may already have harvested credentials, escalated privileges, and reached data access or exfiltration.
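The timing gap described above can be made concrete with a small simulation. The sketch below is a deliberately simplified detector, assuming it scores per-minute event counts against a baseline with a z-score and only evaluates a window once that window has closed. The function name, window size, threshold, baseline values, and agent action rate are all hypothetical choices for illustration, not figures from the report.

```python
from statistics import mean, stdev


def first_alert_time(event_times, baseline_counts, window_s=60.0, z_threshold=3.0):
    """Return the time (in seconds) of the first anomaly alert, or None.

    Simplifying assumption: the detector scores each fixed window only after
    the window has closed, so the earliest possible alert is the window's end.
    """
    if not event_times:
        return None
    mu, sigma = mean(baseline_counts), stdev(baseline_counts)
    last_window = int(max(event_times) // window_s)
    for w in range(last_window + 1):
        start, end = w * window_s, (w + 1) * window_s
        count = sum(1 for t in event_times if start <= t < end)
        if sigma > 0 and (count - mu) / sigma > z_threshold:
            return end
    return None


# Hypothetical baseline: events per minute observed from one host on normal days.
baseline = [4, 6, 5, 7, 5, 6, 4, 5]

# Hypothetical agent: 200 actions, one every 0.1 seconds, starting at t = 0.
agent_events = [i * 0.1 for i in range(200)]

attack_finished_at = agent_events[-1]
alert_at = first_alert_time(agent_events, baseline)
print(f"agent finished at {attack_finished_at:.1f}s, alert raised at {alert_at:.1f}s")
```

Under these assumptions the burst is clearly anomalous, yet the alert is raised only when the first window closes, well after the agent has finished its actions. Streaming detectors narrow this gap but still depend on accumulating enough deviation before they fire.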

Rule-based detection faces similar challenges. It relies on identifying known attacker tools, patterns, and sequences. An AI agent that can combine atomic exploit steps into many possible chains changes the nature of rule engineering. Defenders cannot predefine rules for all variations, and even subtle changes in the sequence can bypass static detections. The combinatorial flexibility of agentic exploits makes exhaustive rule coverage unrealistic.
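A rough count shows why exhaustive rule coverage does not scale. The sketch below assumes, for illustration only, that any ordering of distinct atomic steps is a valid chain. Real attacks have dependencies between steps, so this is an upper bound rather than a measurement. The function name and the step counts are hypothetical.

```python
from math import perm


def ordered_chains(num_atomic_steps: int, chain_length: int) -> int:
    """Count ordered chains of distinct atomic steps: n! / (n - k)!."""
    return perm(num_atomic_steps, chain_length)


# Chains of five steps drawn from a growing toolbox of atomic techniques.
for n in (5, 10, 20):
    print(f"{n} atomic steps -> {ordered_chains(n, 5)} possible 5-step chains")
```

With 20 atomic steps the upper bound is already 1,860,480 distinct five-step chains, which is far more than a rule set can enumerate sequence by sequence.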

This shift requires defenders to adopt approaches that trigger on attacker intent rather than post-factum behavioral deviations or pattern recognition.

Proactive and early threat detection

As Anthropic highlights, an agentic, autonomously orchestrated exploit requires a fundamental shift in threat detection strategy, with early and proactive detection playing a central role. As the report indicates, “We are prototyping proactive early detection systems for autonomous cyber attacks and developing new techniques for investigating and mitigating large-scale distributed cyber operations.”

Proactive detection operates on a different principle: anticipate the attack and position defensive countermeasures ahead of time. While defenders cannot know when an exploit will begin or which sequence the agent will choose, they do maintain a critical advantage. They understand their own environment. This home-field advantage allows defenders to pre-position detection mechanisms near high-value assets and probable attack paths.

The role of cyber deception for proactive and early detection

Proactive detection

Cyber deception is based on the principle of anticipating threats and setting traps for the attacker. These traps can take multiple forms: honeytokens, which are deceptive credentials placed on endpoints and in identity stores, and decoys, which are network entities that mimic high-value assets.

Placement is guided by knowledge of which assets matter most and the likely pathways an attacker would take to reach them. This asset-centric strategy does not require prior knowledge of attacker TTPs.

A practical implementation involves:

- Identifying the high-value assets

- Surrounding high-value assets with deceptions

- Placing honeytokens across endpoints and identity stores
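The three steps above can be sketched as a small planning routine. This is a minimal illustration, not a description of any product: `DeceptionPlan`, `build_plan`, the decoy naming scheme, and the `svc-backup-` honeytoken account pattern are all hypothetical, and a real deployment would also need to register these entries in the directory and wire them to alerting.

```python
from dataclasses import dataclass, field


@dataclass
class DeceptionPlan:
    # High-value asset name -> decoy host names placed around it.
    decoys: dict[str, list[str]] = field(default_factory=dict)
    # Endpoint name -> name of the fake credential planted on it.
    honeytokens: dict[str, str] = field(default_factory=dict)


def decoy_names(asset: str, count: int) -> list[str]:
    """Derive decoy names that resemble the asset (hypothetical naming scheme)."""
    prefix, _, suffix = asset.rpartition("-")
    if prefix and suffix.isdigit():
        return [f"{prefix}-{int(suffix) + 10 * (i + 1)}" for i in range(count)]
    return [f"{asset}-{i + 1:02d}" for i in range(count)]


def build_plan(high_value_assets, endpoints, decoys_per_asset=2) -> DeceptionPlan:
    plan = DeceptionPlan()
    # Steps 1 and 2: take the identified high-value assets and surround each with decoys.
    for asset in high_value_assets:
        plan.decoys[asset] = decoy_names(asset, decoys_per_asset)
    # Step 3: plant one honeytoken credential per endpoint.
    for endpoint in endpoints:
        plan.honeytokens[endpoint] = f"svc-backup-{endpoint.lower()}"
    return plan


plan = build_plan(["SQLSVR-PROD-460"], ["WS-017", "WS-042"])
print(plan.decoys)
print(plan.honeytokens)
```

The plan is driven entirely by the asset inventory, which reflects the asset-centric point made earlier: nothing in it depends on knowing the attacker's TTPs in advance.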

Early detection by anchoring deceptions around early-stage MITRE tactics

Figure 2: Deception design based on MITRE tactics used early in the attack lifecycle.

Agentic attacks mirror human-driven attacks in structure. They typically begin with reconnaissance to learn the target environment and gain situational awareness. The resulting data feeds the next stage of the exploit sequence, which might involve vulnerability exploits, credential compromise, and subsequent lateral movement.

The MITRE ATT&CK framework provides a standardized taxonomy for modeling agentic AI attacks. In a typical exploit sequence, the adversary performs tactics such as Discovery/Reconnaissance, Credential Access, Lateral Movement, Defense Evasion, Privilege Escalation, and Persistence early in the attack lifecycle. Tactics such as Exfiltration and Impact are associated with later-stage actions, performed when the offensive activity is close to completing its objective.

By anchoring a deception design that combines honeytokens with decoys around these early-stage MITRE tactics, the defender gains the ability to detect the exploit early. An agent performing these tactics is likely to encounter one or more traps during the early stages of the exploit sequence. Any interaction with a trap produces an immediate, verifiable indicator of malicious activity, giving defense teams immediate visibility into the exploit. By stopping the agent early, often at the reconnaissance or credential access stage, defenders can prevent the exploit from reaching the exfiltration stage and protect the high-value assets.
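One way to reason about this anchoring is to keep an explicit map from early-stage tactics to the traps that cover them, and to check for gaps. The sketch below takes the early and late tactic names from the text. The pairing of tactics with specific trap types in `TRAPS_BY_TACTIC` is a hypothetical example, not the design shown in Figure 2.

```python
# Tactic names as listed in the text (Discovery and Reconnaissance kept separate).
EARLY_TACTICS = {
    "Discovery", "Reconnaissance", "Credential Access", "Lateral Movement",
    "Defense Evasion", "Privilege Escalation", "Persistence",
}
LATE_TACTICS = {"Exfiltration", "Impact"}

# Hypothetical pairing of tactics with trap types, for illustration only.
TRAPS_BY_TACTIC = {
    "Discovery": ["decoy host entries in the directory"],
    "Credential Access": ["honeytoken credentials on endpoints"],
    "Lateral Movement": ["network decoys near high-value assets"],
}


def stage_of(tactic: str) -> str:
    """Classify a tactic as 'early', 'late', or 'unknown'."""
    if tactic in EARLY_TACTICS:
        return "early"
    if tactic in LATE_TACTICS:
        return "late"
    return "unknown"


def coverage_gaps(traps_by_tactic: dict) -> list:
    """Return early-stage tactics that have no trap assigned, sorted by name."""
    covered = {tactic for tactic, traps in traps_by_tactic.items() if traps}
    return sorted(EARLY_TACTICS - covered)


print(stage_of("Credential Access"))
print(coverage_gaps(TRAPS_BY_TACTIC))
```

A gap list like this gives a simple review artifact: each uncovered early tactic is a stage the agent could pass through without touching a trap.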

Dynamically updating the type and placement of these traps continues to introduce uncertainty into the agent’s decision-making on any subsequent exploit attempt.
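A minimal sketch of such rotation follows, assuming decoy host names are regenerated on a schedule and must never collide with real hosts. The `rotate_decoys` helper, the name prefixes, and the numbering range are hypothetical. The loop also assumes the requested count is small relative to the available name space, since it would not terminate otherwise.

```python
import random


def rotate_decoys(prefixes, count, rng, taken):
    """Generate `count` fresh decoy names that avoid every name in `taken`."""
    names = set()
    while len(names) < count:
        # Hypothetical scheme: a known prefix plus a three-digit multiple of 10.
        name = f"{rng.choice(prefixes)}-{rng.randrange(100, 1000, 10)}"
        if name not in taken:
            names.add(name)
    return sorted(names)


real_hosts = {"SQLSVR-PROD-460"}
prefixes = ["SQLSVR-PROD", "SQLSVR-STAGING", "ORACLE-PROD", "ORACLE-BKUP"]

rng = random.Random()  # seed it only when reproducible output is needed
print(rotate_decoys(prefixes, 4, rng, real_hosts))
```

Each rotation changes what an enumeration returns, so knowledge gathered by the agent in one attempt is less reliable in the next.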

Example scenario of cyber deception against agentic AI attacks

The figure below, taken from the Anthropic report, shows the exploit sequence carried out by the AI agent. Let us consider the role of deception in this sequence.

Figure 3: Exploit sequence of the agent, beginning with discovery/reconnaissance

( source: https://www.anthropic.com/news/disrupting-AI-espionage )

For the step that scans the target infrastructure, consider the agent performing enumeration against an enterprise directory, such as Active Directory, to find high-value targets. The agent might execute a command along these lines:

# Look for database servers in the environment

$target_list = Get-ADComputer -Filter "Name -like '*sqlsvr*' -or Name -like '*oracle*'"

Without deception, the scan returns only real systems. The agent might locate an entry such as SQLSVR-PROD-460, identify it as a high-value asset, and immediately begin preparing exploitation steps. Systems like this would become the focus of subsequent activity, including vulnerability exploits and other offensive operations targeting those assets.

When the defender deploys deception proactively, the environment is instrumented with traps ahead of the agentic AI exploit. With these traps in place, the same command executed by the attacker returns a blend of real and deceptive entries, altering the agent’s perception of the environment and creating opportunities for early, reliable detection. The result might look like this:

SQLSVR-PROD-110

SQLSVR-STAGING-220

ORACLE-PROD-340

ORACLE-BKUP-250

SQLSVR-PROD-460

The agent then attempts to target SQLSVR-PROD-110, which is a decoy. This interaction triggers an immediate alert, giving security teams a high-confidence signal of the agentic exploit. From here, defenders can apply automated response actions to isolate and block the agent, ensuring the exploit is contained and stopped at this early stage.
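The detection logic in this scenario is simple enough to sketch directly. The example below assumes, for illustration, that every listed entry other than SQLSVR-PROD-460 is a decoy; the scenario itself only establishes that SQLSVR-PROD-110 is one. The `Alert` type, the `check_interaction` helper, the source address, and the `isolate-source` action label are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Entries returned by the enumeration in the scenario above.
DIRECTORY_VIEW = [
    "SQLSVR-PROD-110",
    "SQLSVR-STAGING-220",
    "ORACLE-PROD-340",
    "ORACLE-BKUP-250",
    "SQLSVR-PROD-460",
]

# Assumption for illustration: only SQLSVR-PROD-460 is a real asset.
REAL_ASSETS = {"SQLSVR-PROD-460"}
DECOYS = set(DIRECTORY_VIEW) - REAL_ASSETS


@dataclass(frozen=True)
class Alert:
    source: str
    target: str
    action: str = "isolate-source"  # hypothetical automated response label


def check_interaction(source: str, target: str) -> Optional[Alert]:
    """Raise an alert for any interaction with a decoy.

    Legitimate users have no reason to touch a decoy, so a single interaction
    is treated as a high-confidence signal with no baseline or threshold.
    """
    if target in DECOYS:
        return Alert(source=source, target=target)
    return None


print(check_interaction("10.0.0.5", "SQLSVR-PROD-110"))
```

Unlike the anomaly and rule-based approaches discussed earlier, this check fires on the first interaction and does not depend on the order or combination of steps the agent chose.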

Summary

The Anthropic report illustrates how AI agents can now execute nearly the entire cyber kill chain with minimal human involvement. By automating reconnaissance, exploitation, lateral movement, and exfiltration, these agentic attacks operate at machine speed and violate the assumptions that traditional, reactive detection methods rely on. Anomaly- and rule-based systems are not built to recognize the volume, pace, or combinatorial variety of AI-generated exploit paths.

This shift reinforces the need for early, intent-based detection. Deception provides that capability by placing honeytokens and decoys along the attacker’s likely pathways. These traps trigger immediate, high-fidelity signals the moment an agent interacts with them, surfacing the intrusion during reconnaissance or credential access rather than after damage has begun. The example scenario demonstrates how a simple Active Directory enumeration step can be turned against the attacker, producing a reliable alert and allowing defenders to contain the agent before it reaches high-value assets. This proactive approach gives defenders the time and clarity required to counter automated threats that would otherwise outpace human response.